I’m a big fan of Power BI dataflows! In this article, I’ll show you why, and you will be able to reproduce the steps I’ve taken if you have sufficient Project Online permissions (minimal Portfolio Viewer permissions).

The Need

If you work with Project Online, you have most likely considered reporting on your project content at some point. In Project Online, data can be extracted using OData feeds. The bad thing about the connection is that it is slow. It can also be frustrating and difficult to maintain together with a dataset.

Enter Dataflows

As described in this Microsoft article, dataflows are designed for “reusable transformation logic.” In other words, they help you to do something you would otherwise do manually over and over again for multiple reports. If you are strict on access policies, dataflows also provides a valid “single source of truth” by limiting access to the source material and promoting the use of the dataflows as that source.

If you are already exploring premium features and Azure Data lake, it’s nice to know that dataflows can be exposed in the data lake and that you will get additional computational power when using the premium feature (described in detail here: “Enhanced compute engine”).

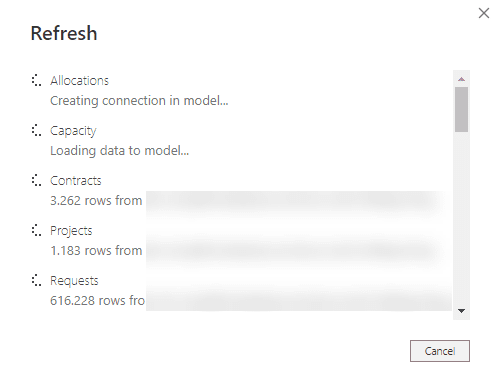

My first experience with dataflows was a very pleasant one. I needed to work on a time-phased report using timesheet time-phased data, task assignment information, and resource capacity information. If you’ve worked with OData before and a large enough source, you know that this would take forever to load into Power BI.

When I moved my most basic data retrieval processes to dataflows, the file was smaller and it loaded much, much faster.

How to Configure Dataflows for Project Online

For the sake of illustration, we will extract the following tables and run them as dataflows on the Power BI service.

- TasksTimephasedDataSet

- Tasks

- Projects

The TasksTimephasedDataSet will be the largest due to the creation of a single row for each task each day. Odata can be accessed through the URL format below:

https://<your environment>.sharepoint.com/sites/pwa/_api/ProjectData

This creates a connection to all the data tables, that can then be accessed for reporting.

Dataflows have some limitations on complex queries, but will be suitable for the initial data load and alterations I will show you now.

Using Dataflows in Power BI Datasets

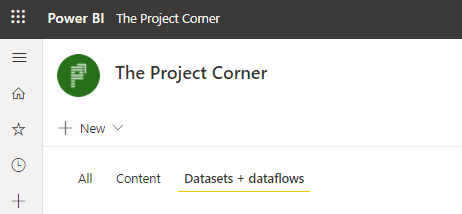

To create a dataflow, we start from the PowerBI.com homepage. Ideally, you would create a specific workspace to monitor and administrate your dataflows. As you will see, performing the following actions with a service account makes perfect sense.

Within the workspace, you will find a tab called Datasets + dataflows, and this is where we will store our flows.

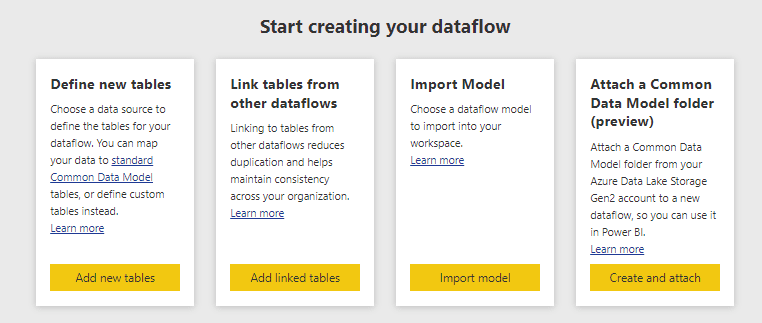

When we press the New button on top, we start the wizard, which walks us through adding a new dataflow.

The four options available tell us that there are more ways to build dataflows than just defining new tables. The other three build upon previously created sources and can be useful when extending those datasets, for instance to reduce duplication (linked tables).

We will “Add new tables,” in this example.

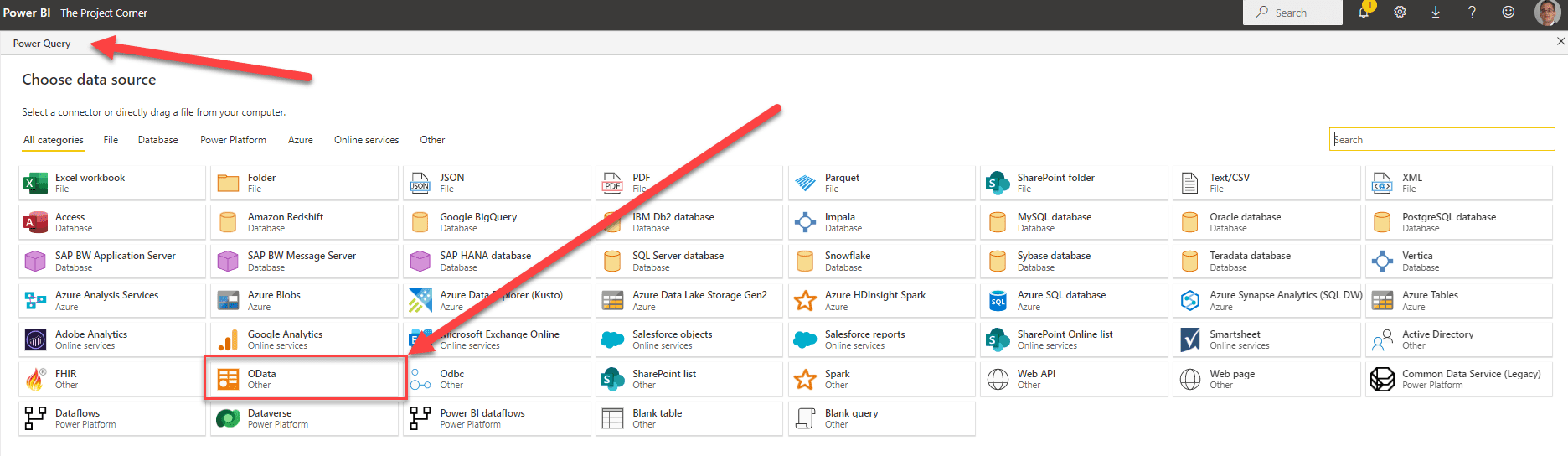

From this point onward, we are presented with a menu structure that is quite similar to the Power BI Power Query interface, and with good reason. This is because this is a Power Query, from the browser.

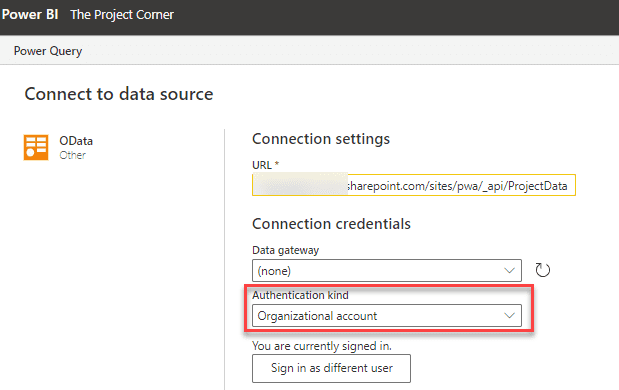

After selecting the OData source, we will need to authenticate. Make sure the URL is correct and you access the source with an organizational account. You will be prompted to sign in. Click next (bottom right of the page) to move to the next step.

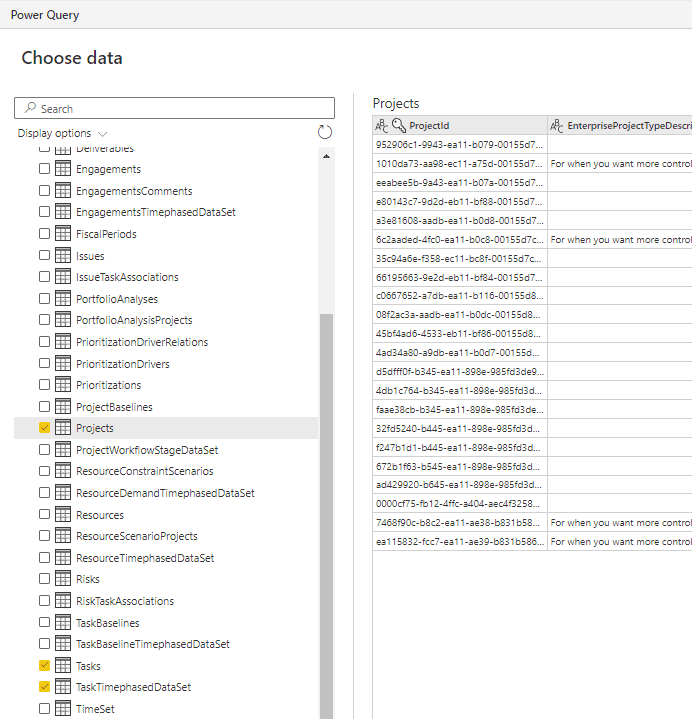

Continuing on, we are presented with all the tables in the OData feed. Here, we will select the three mentioned above. When you select a table, you will see a sample of the data appear in the middle section. This can be useful for inspecting the data before the actual data load action is performed.

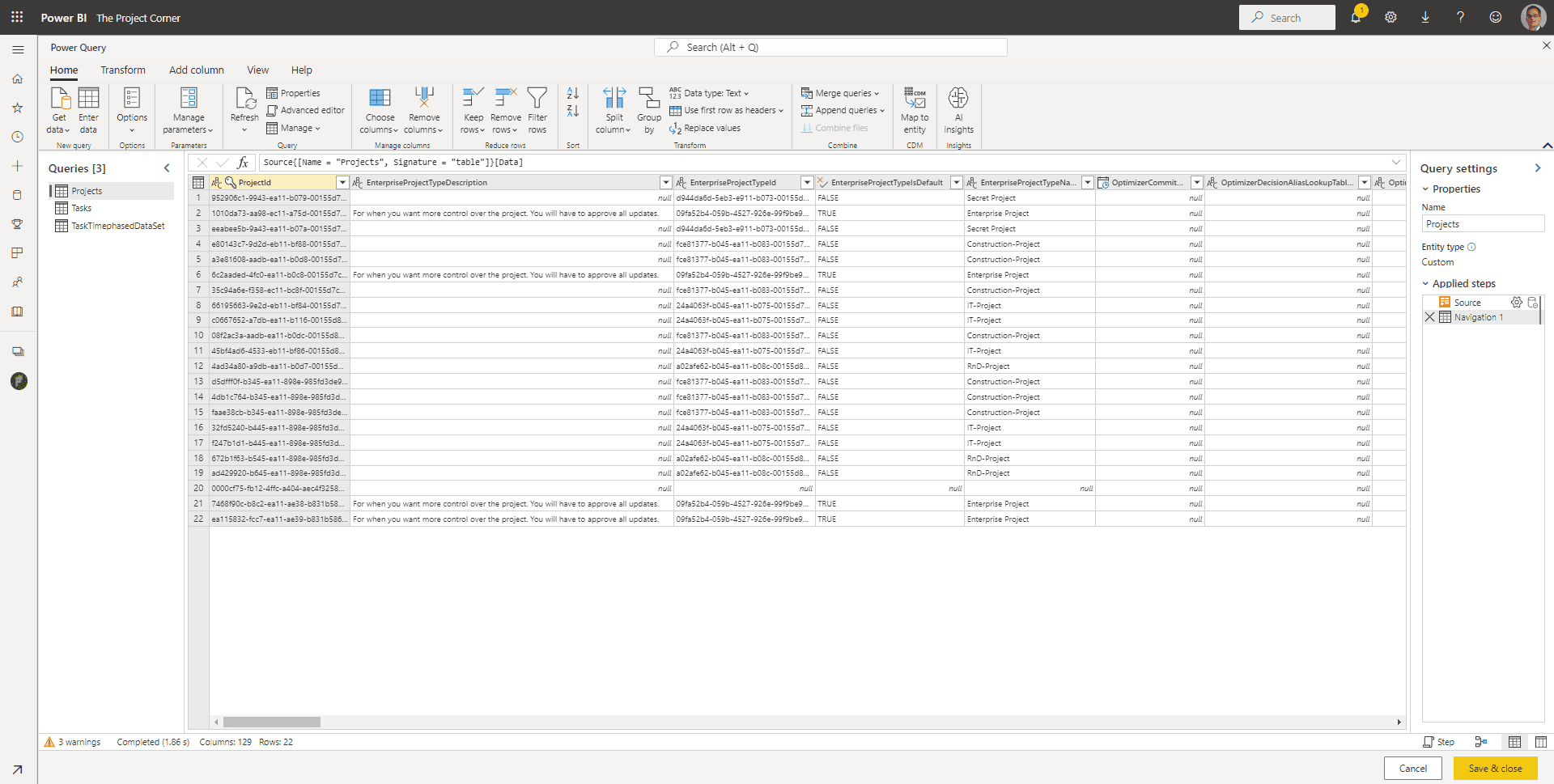

The next step is to “Transform data,” which is obviously the most interesting part of the Dataflow. Here, we will limit the table content, and we will make small transformations. The page will look very familiar, again, to anyone that is used to transforming data in Power BI Desktop.

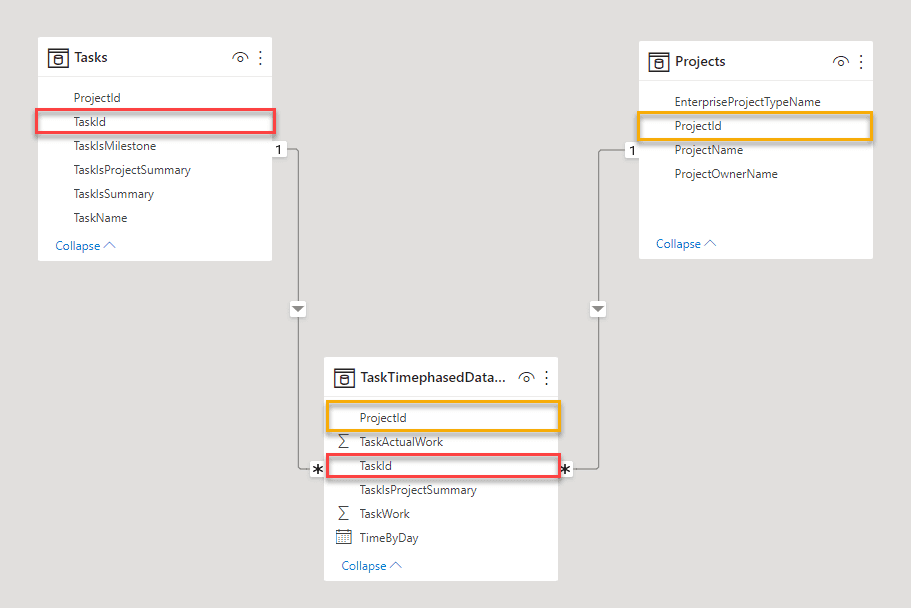

For the Project Table, I will keep the columns ProjectId, EnterpriseProjectTypeName, ProjectName, and ProjectOwnerName. I will filter out the “Timesheet Administrative Work Items” project.

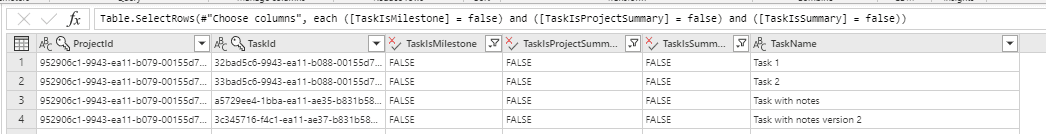

For the Tasks Table, I will keep the columns ProjectId, TaskId, TaskName, TaskIsSummary, TaskIsProjectSummary, and TaskIsMilestone. I will filter out any milestones and summaries.

Now that I have two dimensional tables, I can work on my facts table (in case these terms are new to you, here is a very nice video explaining the differences). Basically, the dimension tables are your “slice and dice” tables, whereas the fact table defines “what is being sliced and diced.” In our example, the thing that is being sliced is workhours. We can filter the data based on task names and/or project names, owners, types, etc.

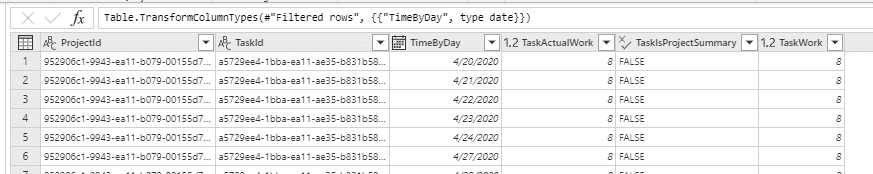

The TimePhasedDataSet will contain the columns: ProjectId, TaskId, TimeByDay, TaskActualWork, TaskWork, and TaskIsProjectSummary. We will keep only the rows that aren’t project summaries and the rows that contain more than 0 TaskWork. As a last step, we will change the TimeByDay column to represent only dates and not the date and time.

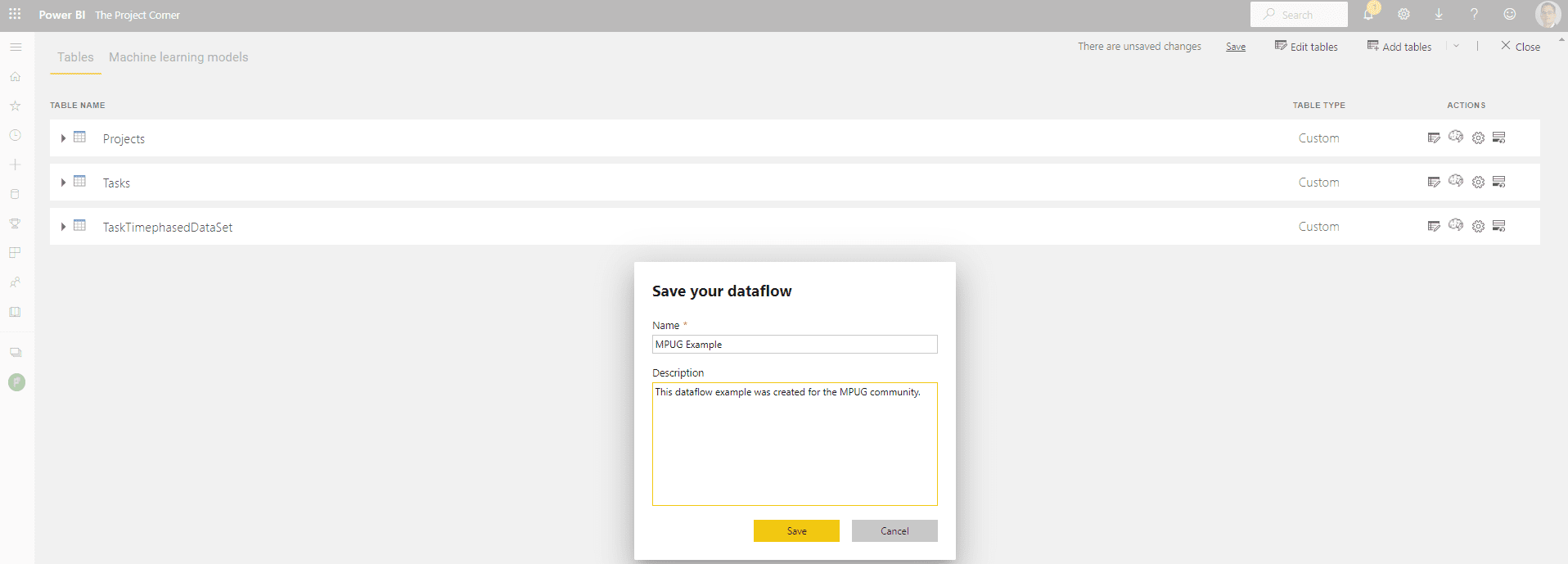

After these changes, I’m done with my dataflow. I’ll save and close the Power Query wizard.

After saving the dataflow, you will get a reminder on the refresh options. Keep your data up to date by adding this refresh frequency. For Project Online, I believe data should be refreshed daily, or even twice a day.

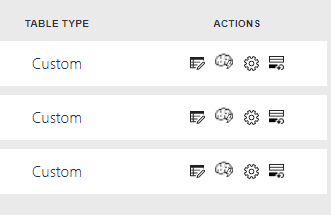

In our data table list, we have four actions now available. These are “Edit table,” “Apply ML Model,” “Properties,” and “Incremental refresh.” Be aware that both Apply ML Model and Incremental refresh might require additional resources and licenses.

With these steps, we’ve created a “Staging dataflow,” and for our purposes, this will be our source for further transformations in Power BI Desktop.

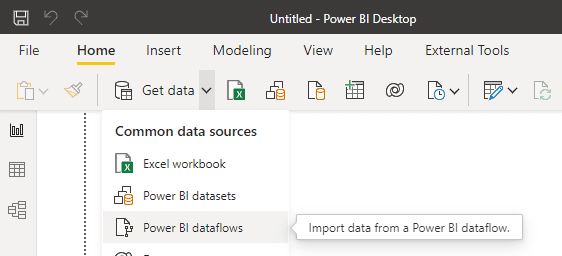

The next steps will be done in Power BI Desktop, and our first action will be to load our dataflow tables.

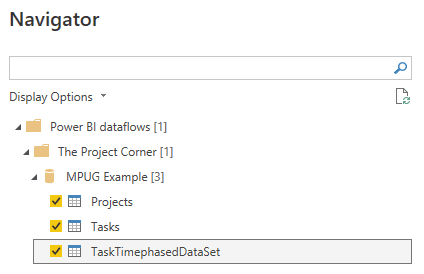

Select all the tables that were just created.

Once the data is available in the Power BI file, be sure to build the correct relationship between the tables.

After the relations have been set, we have our model complete and can start reporting on it.

The data loads very fast and is refreshed daily on my Power BI service.

Adding Some Additional DAX Intelligence

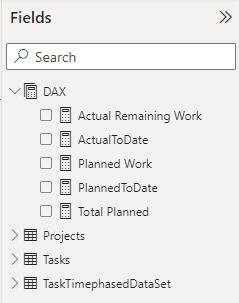

Because dataflows can’t do everything, I wanted to add a little bit more complex DAX measures to the report.

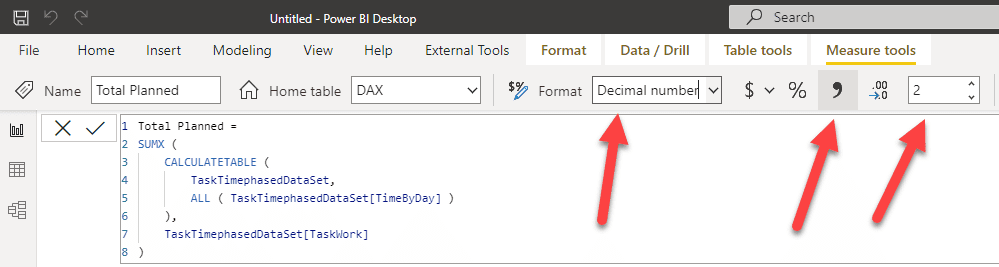

From this lovely old article, we will grasp some valuable DAX queries that we can use to make a nice burn-down chart. I will create a separate DAX table to include the following DAX measures. When creating these DAX measures, make sure to format them correctly right away.

Total planned work for the period

Total Planned :=

SUMX (

CALCULATETABLE (

TaskTimephasedDataSet,

ALL ( TaskTimephasedDataSet[TimeByDay] )

),

TaskTimephasedDataSet[TaskWork]

)

Actual work done to date

ActualToDate :=

SUMX (

FILTER (

CALCULATETABLE (

TaskTimephasedDataSet,

ALL ( TaskTimephasedDataSet[TimeByDay] )

),

TaskTimephasedDataSet[TimeByDay] <= MAX ( TaskTimephasedDataSet[TimeByDay] )

),

TaskTimephasedDataSet[TaskActualWork]

)

Planned work to date

PlannedToDate :=

SUMX (

FILTER (

CALCULATETABLE (

TaskTimephasedDataSet,

ALL ( TaskTimephasedDataSet[TimeByDay] )

),

TaskTimephasedDataSet[TimeByDay] <= MAX ( TaskTimephasedDataSet[TimeByDay] )

),

TaskTimephasedDataSet[TaskWork]

)

Planned work

Planned Work :=

[Total Planned] – [PlannedToDate]

Actual remaining work

Actual Remaining Work :=

[Total Planned] – [ActualToDate]

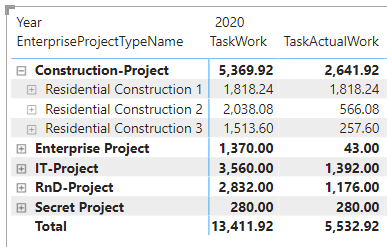

After adding these DAX formulae, our data should look like this:

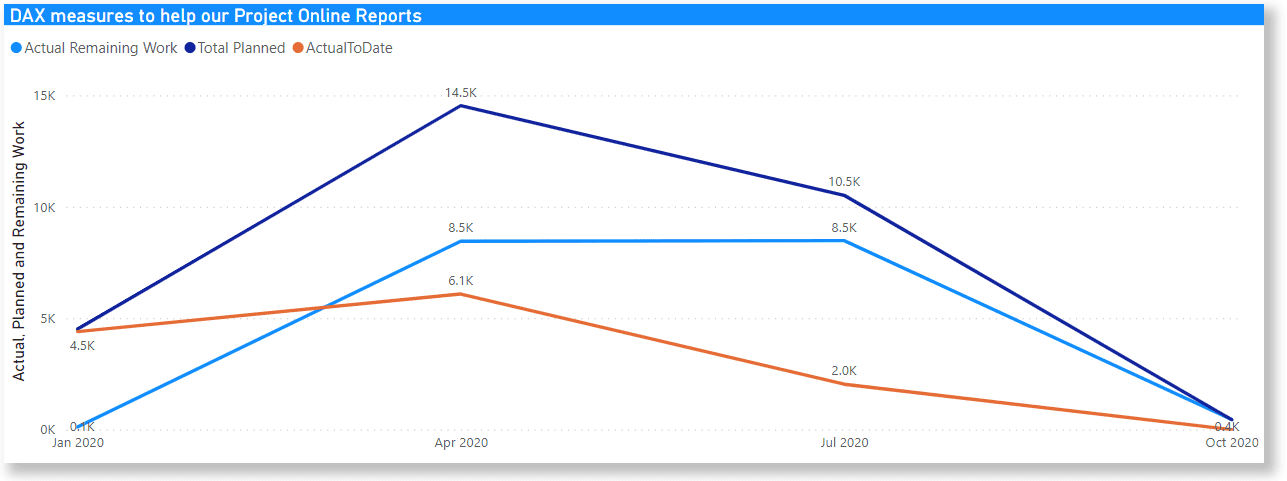

And with this additional information, we can create nice visuals like this one:

Conclusion

Dataflows can make your reporting work in Project Online a lot faster by taking away the long waiting time for data to load. This is especially true in the case of Time Phased DataSets dataflows, as I’ve illustrated in this article.

Once you have the dataflow tables in place, you can expand on them using your DAX skills or even add additional tables from other sources in Power BI Desktop.

There are some advanced options, as well, such as creating even faster table load times with the incremental refresh. You could also consider using machine learning. These actions; however, require additional resources, skills, and licenses.